If you are focused on creating the best customer experience, you know how important authentic customer feedback is for taking the right action. However, the integrity of this feedback depends heavily on your survey design.

How? A poorly crafted survey with biased questions becomes a liability: it can lead to biased responses, misinterpreted data, and ultimately misguided business decisions. These biased questions not only skew the data but also waste a valuable opportunity to gather actionable insights.

So, how do you avoid using these questions in your survey? Let’s find out.

What are Biased Survey Questions?

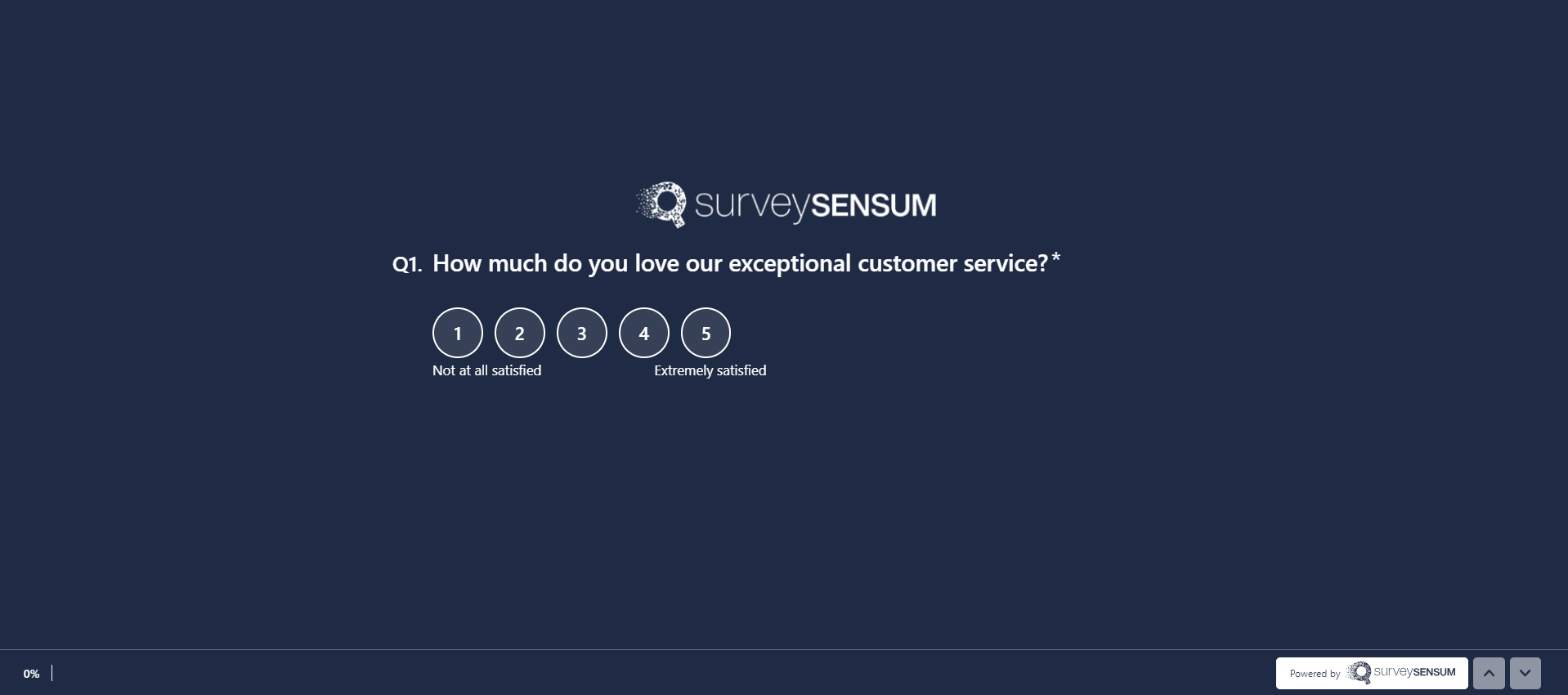

“How much do you love our exceptional customer service?” – Now, what’s wrong with this question? The question assumes that the customer service is exceptional and prompts respondents to provide a positive response. This is called a biased survey question.

A biased survey question leads respondents towards a particular answer, thereby distorting the accuracy and reliability of the gathered data. These questions are formatted or phrased in a way that steers or confuses respondents, making it difficult for them to answer honestly.

Now, let’s understand how you can identify these types of questions in a survey, and avoid and fix them.

7 Common Types of Biased Survey Questions

Here are 7 common types of biased questions that show up in surveys.

1. Leading Questions

Imagine receiving a CSAT survey like this: “How much did you enjoy our customer service?” Now, what’s wrong with this question? It automatically assumes that the customer service was excellent and subtly pressures the respondent into agreeing. Such questions steer respondents and push them to provide feedback that is skewed one way or another.

How to fix this type of leading question? Use neutral, objective wording. Avoid subjective adjectives or context-laden words that frame the question in a positive or negative tone. For example, a better wording for the above question is: “On a scale of 1-5, please rate your experience with our customer service agent.”

| Incorrect Question | Correct Question |
| --- | --- |
| How much do you love our new product? | On a scale of 1-7, please rate your experience with our new product. |
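If you review many questions at once, a small script can help you spot loaded wording before a survey goes out. The following is a minimal sketch, not a complete bias detector: the word list `LOADED_WORDS` and the function name `find_loaded_words` are made up for illustration, and you would extend the list with terms that fit your own surveys.

```python
import re

# Hypothetical word list for illustration; extend it for your own surveys.
LOADED_WORDS = {"love", "enjoy", "exceptional", "excellent", "best", "amazing", "great"}


def find_loaded_words(question: str) -> list[str]:
    """Return the loaded words found in a question, in order of appearance."""
    words = re.findall(r"[a-z']+", question.lower())
    return [word for word in words if word in LOADED_WORDS]


# The incorrect question from the table is flagged, the neutral one is not.
print(find_loaded_words("How much do you love our new product?"))
print(find_loaded_words("On a scale of 1-7, please rate your experience with our new product."))
```

A check like this only catches the words you list, so it works best as a first pass before a human review.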


2. Loaded Questions

Loaded questions contain assumptions that may or may not be true for all respondents. By answering this type of question, a respondent inadvertently ends up agreeing or disagreeing with an implicit statement.

For example, a CSAT survey for a cafe asks: “How much do you like our coffee?” This question already assumes that the respondent drinks coffee and also enjoys the cafe’s coffee. Maybe the customer goes to the cafe for its iced tea, but the question assumes otherwise. This type of question can alienate respondents who do not fit the assumed scenario, leading to inaccurate or incomplete data.

How to fix this type of question? Simple: don’t make any unfounded assumptions. Use wording that doesn’t presume anything about the respondent. For example, the above question could be reworded as “Do you enjoy drinking our coffee? (yes/no)”. For respondents who answer yes, you can then continue with an open-ended question for further analysis: “Which of our coffees do you enjoy the most?” Now there is no assumption, just a simple yes-or-no question followed by an open-ended one.

| Incorrect Question | Correct Question |
| --- | --- |
| Why do you think our customer service is the best? | On a scale of 1-7, please rate your experience with our customer service. |

3. Double-Barreled Questions

These types of questions ask about two different issues at once, making it difficult for respondents to provide authentic feedback.

For example, “How satisfied are you with our product’s quality and price?” A respondent cannot give a single rating that covers both quality and price, because they may feel differently about each. In this case, the respondent will most likely rate just one attribute, and you won’t know which one.

This type of question is often used to shorten the survey, but it ends up costing the business more because it wastes effort on questions that won’t yield authentic results.

How to fix this type of double-barreled question? Split the two issues into separate single-barreled questions. For the above example, ask one question for quality, “How satisfied are you with the product’s quality?”, and another for price, “How satisfied are you with the product’s price?”.

| Incorrect Question | Correct Question |
| --- | --- |
| How satisfied are you with our pricing and customer support? | On a scale of 1-10, please rate your satisfaction with our pricing. |
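A quick way to catch candidates for splitting is to look for questions that join topics with “and” or “or”. This is a rough sketch under that assumption; the function name `looks_double_barreled` is hypothetical, and the check will also flag harmless phrases such as “friends or colleagues”, so treat a match as a prompt to review the question, not as proof of bias.

```python
import re


def looks_double_barreled(question: str) -> bool:
    """Heuristic: flag a question that joins topics with 'and' or 'or'."""
    return re.search(r"\b(and|or)\b", question.lower()) is not None


# The incorrect question from the table is flagged for review.
print(looks_double_barreled("How satisfied are you with our pricing and customer support?"))
# The single-topic version is not.
print(looks_double_barreled("On a scale of 1-10, please rate your satisfaction with our pricing."))
```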

4. Vague Questions

This is exactly what it sounds like – vague. These types of questions can be interpreted in various ways, leading to incoherent results since the range of answers will vary wildly.

For example, “Did you like our product?” This question leaves a lot of room for interpretation. It doesn’t specify which aspect of the product it wants feedback on, which confuses the respondent and results in inauthentic feedback.

How to fix this type of question? Reframe the question to focus on a particular metric that is related to your survey goal. For example, a better wording for the above question is: “Are you satisfied with the product’s pricing?” Now the question makes clear which aspect or metric it is focusing on.

| Incorrect Question | Correct Question |
| --- | --- |
| Do you use our product regularly? | How often do you use our product? Options: Daily, Weekly, Monthly, Rarely, Never |

5. Double Negatives

While designing your survey, don’t forget to check your grammar. Grammatical slips often lead to confusion and skew the survey result. A common example is a double negative, as in “Do you agree that our product should not be unavailable?” Read the question again and see whether it confuses you.

How to fix this type of question? To correct a double negative, rephrase the question using a positive or neutral version of the phrase. For example, “How would you feel if our product were no longer available to use?”

| Incorrect Question | Correct Question |
| --- | --- |
| Do you agree that the company should not discontinue the product? | Do you think the company should continue offering the product? Options: Yes/No |
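Double negatives can also be screened for with a simple word count. The sketch below assumes two small, hand-picked word lists (`NEGATORS` and `NEGATIVE_WORDS`, both hypothetical) and flags any question that contains two or more of those words. It is a heuristic only and will miss negative words that are not on the lists.

```python
import re

# Hypothetical lists for illustration only; add the terms your surveys use.
NEGATORS = {"not", "no", "never", "don't", "doesn't", "isn't", "shouldn't"}
NEGATIVE_WORDS = {"unavailable", "discontinue", "unlikely", "dissatisfied", "unable"}


def count_negations(question: str) -> int:
    """Count negators and negative words in a question."""
    # Normalize curly apostrophes so contractions like "don’t" match the list.
    text = question.lower().replace("’", "'")
    words = re.findall(r"[a-z']+", text)
    return sum(1 for word in words if word in NEGATORS or word in NEGATIVE_WORDS)


def has_double_negative(question: str) -> bool:
    """Flag a question that contains two or more negations."""
    return count_negations(question) >= 2


print(has_double_negative("Do you agree that the company should not discontinue the product?"))
print(has_double_negative("Do you think the company should continue offering the product?"))
```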

6. Poor Scale Options

Using different types of rating scales makes your surveys interesting and also enables you to gather close-ended feedback. However, these types of questions can become a liability and skew your survey result if not used correctly. If the scale is not defined correctly it won’t capture the right customer sentiment.

For example, suppose you ask an NPS question, “On a scale of 0-10, how likely are you to recommend our product to your friends or colleagues?”, but label the scale from “Very Unsatisfied” to “Very Satisfied”. The labels measure satisfaction while the question asks about likelihood. Here the correct scale runs from “Not at all likely” to “Extremely likely”.

How to fix this type of question? Define your survey objective clearly, as this will help you determine the correct scale for your question. For example, for CSAT the scale should run from “Very unsatisfied” to “Very satisfied”. Also, use a survey builder tool that comes with pre-designed questions with the right scale.

| Incorrect Question | Correct Question |
| --- | --- |
| How would you rate our service? Options: Excellent, Very Good, Good | How would you rate our service? Options: Excellent, Good, Fair, Poor, Very Poor |
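One property you can check mechanically is whether a scale offers as many negative options as positive ones. The sketch below assumes you label each option’s polarity by hand; the `POLARITY` mapping and the `is_balanced` function are hypothetical, and treating “Fair” as neutral is an assumption you may want to change.

```python
# Hypothetical polarity labels: +1 positive, 0 neutral, -1 negative.
POLARITY = {
    "Excellent": 1,
    "Very Good": 1,
    "Good": 1,
    "Fair": 0,  # assumed neutral for this sketch
    "Poor": -1,
    "Very Poor": -1,
}


def is_balanced(options: list[str]) -> bool:
    """Return True when positive and negative options are equal in number."""
    positives = sum(1 for option in options if POLARITY[option] > 0)
    negatives = sum(1 for option in options if POLARITY[option] < 0)
    return positives == negatives


# The positive-only scale fails the check; the five-point scale passes.
print(is_balanced(["Excellent", "Very Good", "Good"]))
print(is_balanced(["Excellent", "Good", "Fair", "Poor", "Very Poor"]))
```

A balanced count does not guarantee that the labels match the question, so you still need to confirm that the scale measures what the question asks.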

With the SurveySensum survey builder, you don’t have to worry about the survey scale. The tool comes with pre-designed questions that use the right scale for your survey.

7. Agreement Bias

Ever read a user agreement? You have probably skimmed through it and just clicked “yes” or “okay”. This is because such agreements are long and tedious, and you just want to skip ahead. The same goes for surveys.

For example, imagine receiving a survey on your recent purchase of an air conditioner, but the question within the survey is asking about the likelihood of recommending the product – an NPS question. Now, how would you rate the likelihood if you received the product just 4 days ago and haven’t had the time to explore? In this case, you will either ignore the survey or provide mindless answers that are inauthentic.

This type of bias happens when the survey is too long or irrelevant to the customer’s experience and they just want to get it over with. With this bias, the respondent will either answer at random or give uniformly positive or uniformly negative answers, and the resulting survey data is inauthentic.

How to fix this type of question? Define your survey objective. This will help you understand your survey goals and narrow your questions down to those relevant to the objective. Also, try to keep surveys to 5-7 minutes and ask only relevant questions.

Examples of Biased Survey Questions

Here are some examples of biased survey questions that you can refer to when checking your next survey for bias.

1. Leading Questions

- “How much do you love our new product?” This assumes the respondent loves the product.

- “Don’t you think our service is excellent?” This suggests a positive answer.

2. Loaded Questions

- “Why do you think our product is the best on the market?” This assumes the product is the best.

- “How much better is our product compared to competitors?” This implies the product is better.

3. Double-Barreled Questions

- “How satisfied are you with our customer service and our product quality?” This combines two different issues into one question.

- “Do you find our website easy to navigate and informative?” This mixes two aspects of the website experience.

4. Absolute Questions

- “Are you never satisfied with our competitors’ products?” This forces an absolute negative response.

- “Do you always use our product?” This requires a yes or no answer that may not accurately reflect occasional use.

5. Assumptive Questions

- “How often do you enjoy our weekly newsletters?” This assumes the respondent enjoys the newsletters.

- “What do you like most about our customer service?” This assumes there is something the respondent likes.

Key Takeaway

Customer surveys are a great way to boost customer satisfaction and to understand your customers’ perception of your product, service, and overall brand. But more often than not, poorly constructed surveys with biased questions end up costing businesses. They skew survey data and waste time and effort for both the business and the respondent.

To avoid the types of bias discussed in this blog, you need to implement surveys with the help of a robust survey builder tool like SurveySensum, which comes with built-in survey templates and pre-designed questions. The tool also helps you analyze your survey data with advanced capabilities such as text analytics and cross-tab analysis, and helps you take prioritized action that will have the most impact on your bottom line.